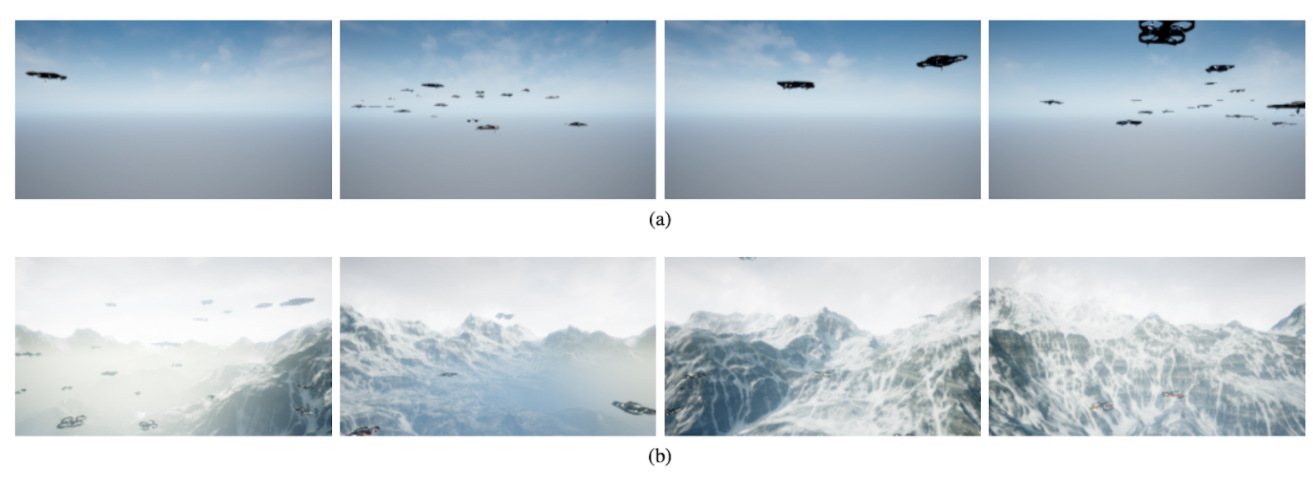

A recent paper by members of the DCIST alliance develops a perception-action-communication loop framework called Vision-based Graph Aggregation and Inference (VGAI). This decentralized, multi-agent learning-to-control framework maps raw visual observations to agent actions, aided by local communication among neighboring agents. It is implemented as a cascade of a convolutional neural network (CNN) and a graph neural network (GNN): the CNN handles agent-level visual perception and feature learning, while the GNN handles swarm-level communication, local information aggregation, and agent action inference. Because the CNN and GNN are trained jointly, the image features and the communication messages are learned together and tailored to the specific task.

The researchers train the VGAI controller offline with imitation learning, using a centralized expert controller as the teacher. The resulting controller can then be deployed in a distributed manner for online execution. It also scales well: it can be trained on smaller teams and applied to larger ones. In a multi-agent flocking application, the researchers show that VGAI performs comparably to or better than other decentralized controllers while using only visual input (even under degraded visibility) and without access to precise location or motion state information.
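To make the CNN/GNN split and the imitation-learning setup more concrete, here is a minimal, stdlib-only Python sketch of one decentralized graph-filter layer plus an imitation loss. It is not the VGAI implementation. It assumes the CNN output is already available as a per-agent feature vector, models communication with a hypothetical disk radius, and uses a polynomial graph filter z_i = tanh(Σ_k H_k (S^k x)_i), a common GNN layer for decentralized control whose exact form in VGAI may differ. All names (`neighbors`, `shift`, `graph_filter_layer`, `policy`, `imitation_loss`) are hypothetical, and training (gradient updates on the filter taps) is omitted.

```python
import math
from typing import List, Sequence, Tuple

Vec = List[float]
Mat = List[List[float]]


def neighbors(positions: Sequence[Tuple[float, float]], radius: float) -> List[List[int]]:
    """Assumed disk model: agents i and j can talk if they are within `radius`.
    Positions are used only by the simulator to build the graph, not by the policy."""
    n = len(positions)
    return [[j for j in range(n) if j != i and math.dist(positions[i], positions[j]) <= radius]
            for i in range(n)]


def shift(features: List[Vec], nbrs: List[List[int]]) -> List[Vec]:
    """One communication round, (S x)_i = sum of the vectors sent by i's neighbors,
    where S is the unweighted adjacency matrix of the communication graph."""
    dim = len(features[0])
    out = []
    for i in range(len(features)):
        acc = [0.0] * dim
        for j in nbrs[i]:
            acc = [a + b for a, b in zip(acc, features[j])]
        out.append(acc)
    return out


def matvec(m: Mat, v: Vec) -> Vec:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def graph_filter_layer(features: List[Vec], nbrs: List[List[int]], taps: List[Mat]) -> List[Vec]:
    """z_i = tanh(sum_k H_k (S^k x)_i) for k = 0..K, with K = len(taps) - 1 rounds."""
    hop = features  # S^0 x: each agent's own (CNN) feature
    out = [[0.0] * len(taps[0]) for _ in features]
    for k, h_k in enumerate(taps):
        if k > 0:
            hop = shift(hop, nbrs)  # S^k x from S^(k-1) x: one more message exchange
        for i, v in enumerate(hop):
            out[i] = [o + y for o, y in zip(out[i], matvec(h_k, v))]
    return [[math.tanh(o) for o in row] for row in out]


def policy(features: List[Vec], nbrs: List[List[int]], taps: List[Mat], readout: Mat) -> List[Vec]:
    """Each agent maps its own filtered feature to an action (weights shared by all agents)."""
    return [matvec(readout, z) for z in graph_filter_layer(features, nbrs, taps)]


def imitation_loss(pred: List[Vec], expert: List[Vec]) -> float:
    """Mean squared error between learned actions and the centralized expert's actions."""
    errs = [(p - e) ** 2 for pv, ev in zip(pred, expert) for p, e in zip(pv, ev)]
    return sum(errs) / len(errs)


# Toy example: three agents, the third out of communication range.
positions = [(0.0, 0.0), (1.0, 0.0), (5.0, 0.0)]
nbrs = neighbors(positions, radius=1.5)
features = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # stand-ins for CNN outputs
taps = [[[1.0, 0.0], [0.0, 1.0]], [[0.5, 0.0], [0.0, 0.5]]]  # H_0, H_1 (K = 1)
readout = [[1.0, 0.0], [0.0, 1.0]]  # identity readout: 2-D action per agent
actions = policy(features, nbrs, taps, readout)
expert = [[0.0, 0.0]] * 3  # placeholder expert actions, not real data
print(nbrs, imitation_loss(actions, expert))
```

In this sketch, each extra filter tap costs one more round of neighbor-to-neighbor messages, so an agent only ever uses information from agents at most K hops away. The learned taps and readout do not depend on the number of agents, which is one reason a controller of this form can be trained on a small team and run on a larger one.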

Video: https://www.dropbox.com/sh/adp76y0ro1jb5f2/AADO4xhvkcCrUfIlOGKACDkla?dl=0

Paper: https://www.dcist.org/2022/01/18/learning-decentralized-controllers-with-graph-neural-networks-2/